My name is Joel Vargas, and I spent two decades looking for a title that fit this issue. In the end, nothing fit better than the reality I’ve witnessed across 150 cities: for most of the public sector, and police departments in particular, the data journey is a nightmare.

While a handful of agencies have made significant leaps, I estimate that 80% to 90% of U.S. police departments still rely on 1990s-era technology and workflows to make 2026-era decisions.

The Broken Assembly Line

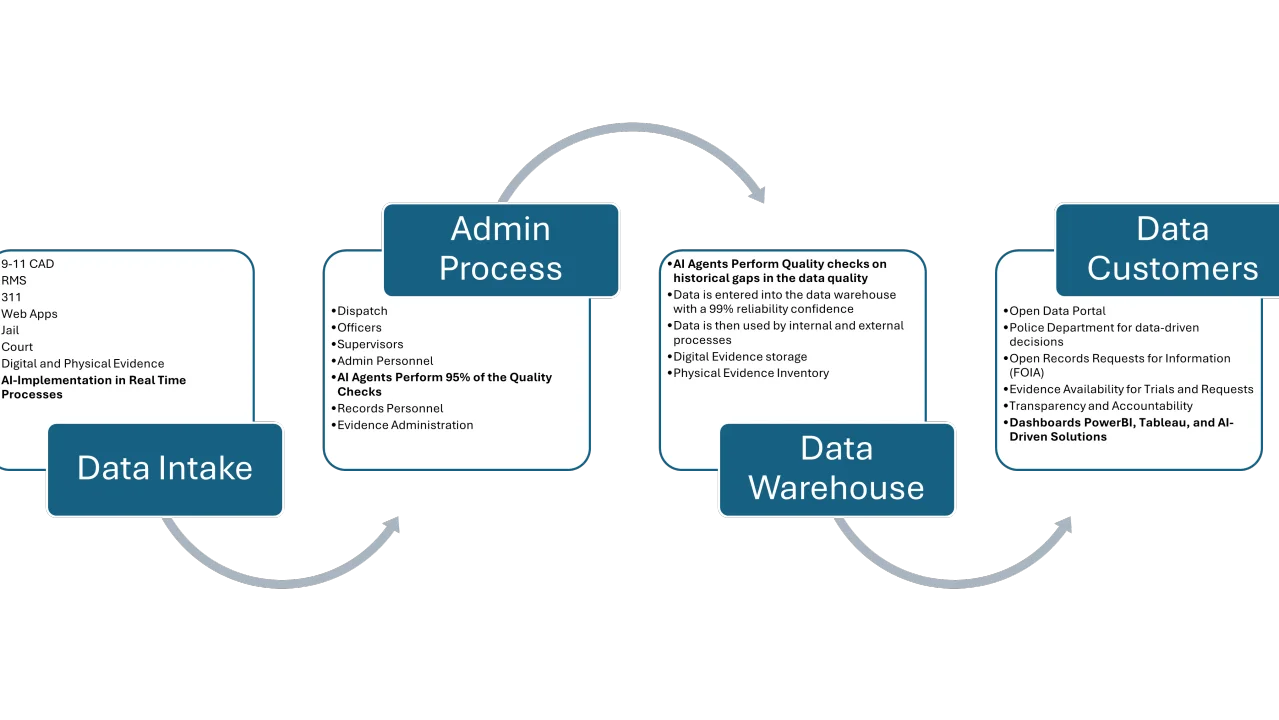

Think of data like a car on an assembly line. If the frame is welded incorrectly at the start, the finished vehicle will never be safe. In policing, the “assembly” looks like this:

- The Intake: An officer on the street writes a report.

- The First Failure: A supervisor fails to conduct a quality review at the first level.

- The Records Gap: Reports head to the Records Department. If the data isn’t aligned, the staff—often overwhelmed—lack the time to send it back for correction.

- The Warehouse: This “dirty data” is dumped into a warehouse, where the public, elected officials, and command staff expect to consume it for “data-driven” decisions.

When this failure happens thousands of times a month, you aren’t just looking at a few typos; you are looking at a systemic collapse of institutional intelligence.

The Result: “Ghost” Data

The consequences are more than just administrative; they are operational.

Take a simple Call for Service. If an incident occurs at a specific intersection but the report isn’t corrected to reflect that location, the data point defaults to the police station or a hospital. Suddenly, your heat map shows a “crime spike” at the precinct itself. Analysts then spend 80% of their time manually “fixing” dots on maps rather than identifying real criminal trends.
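One way to surface these "ghost" points before they reach a heat map is to flag any incident whose coordinates sit on a known default location. The following is a minimal sketch, not a production tool: the `Incident` record, the `DEFAULT_LOCATIONS` table, the coordinates, and the tolerance are all hypothetical and would need to be replaced with values from your own CAD or RMS export.

```python
from dataclasses import dataclass

# Hypothetical default locations (latitude, longitude). These are
# illustrative values, not real facility coordinates.
DEFAULT_LOCATIONS = {
    "police_station": (40.7128, -74.0060),
    "hospital": (40.7210, -74.0010),
}


@dataclass
class Incident:
    report_id: str
    lat: float
    lon: float


def flag_ghost_points(incidents, defaults=DEFAULT_LOCATIONS, tolerance=1e-4):
    """Return (report_id, facility) pairs for incidents plotted on a default location.

    `tolerance` is in decimal degrees and is an assumed value; tune it
    to the precision of your geocoder.
    """
    flagged = []
    for inc in incidents:
        for name, (lat, lon) in defaults.items():
            if abs(inc.lat - lat) <= tolerance and abs(inc.lon - lon) <= tolerance:
                flagged.append((inc.report_id, name))
                break  # one match per incident is enough to queue it for review
    return flagged


incidents = [
    Incident("R-001", 40.7128, -74.0060),    # sits exactly on the station
    Incident("R-002", 40.7300, -73.9900),    # an ordinary street location
    Incident("R-003", 40.72101, -74.00102),  # within tolerance of the hospital
]

ghosts = flag_ghost_points(incidents)
print(ghosts)
```

A flag here only means "review this report." Some incidents really do occur at the station or the hospital, so the list should feed a correction queue rather than trigger automatic changes.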

Accepting this as the “status quo” doesn’t just hinder transparency—it makes modern policing impossible.

Turning the Horror Story into a Success Story

Fixing this doesn’t necessarily require a multi-million dollar software overhaul. It requires accountability and the “Assembly Line” mindset.

- Stop the Line: As in a car factory, you must fix problems at intake. If the data is wrong at the start, it should not move forward.

- Empower Records: Give your records team a dedicated “correction gap” (e.g., 7 days) to ensure all links and information are properly validated before ingestion.

- Monitor the Failure Rate: Create internal dashboards—not for the public, but for leadership—to identify which shifts or units are producing the highest rates of “dirty data.”

- Training Over Technology: Resistance and sabotage are common when changing workflows. Leadership must stay firm. Often, you don’t need new staff; you need to retrain the dedicated people you already have.
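The "stop the line" and "monitor the failure rate" steps can be sketched in a few lines of code. This is a hedged illustration only: the field names in `REQUIRED_FIELDS`, the `shift` key, and the sample reports are assumptions made for the example, and a real check would cover far more than missing fields.

```python
from collections import defaultdict

# Hypothetical required fields; substitute the fields your agency
# actually requires before a report may leave intake.
REQUIRED_FIELDS = ("report_id", "incident_address", "offense_code", "occurred_at")


def validate_report(report):
    """Return a list of problems. An empty list means the report may move forward."""
    return [
        f"missing {field}"
        for field in REQUIRED_FIELDS
        if not str(report.get(field) or "").strip()
    ]


def failure_rate_by_shift(reports):
    """Return the share of reports per shift that fail validation."""
    totals = defaultdict(int)
    failures = defaultdict(int)
    for report in reports:
        shift = report.get("shift", "unknown")
        totals[shift] += 1
        if validate_report(report):
            failures[shift] += 1
    return {shift: failures[shift] / totals[shift] for shift in totals}


# Made-up sample reports with placeholder values.
reports = [
    {"report_id": "R-101", "shift": "day", "incident_address": "5th St & Main St",
     "offense_code": "13A", "occurred_at": "2026-01-05T14:30"},
    {"report_id": "R-102", "shift": "night", "incident_address": "",
     "offense_code": "13A", "occurred_at": "2026-01-05T23:10"},
    {"report_id": "R-103", "shift": "night", "incident_address": "12 Oak Ave",
     "offense_code": "220", "occurred_at": "2026-01-06T01:45"},
    {"report_id": "R-104", "shift": "day", "incident_address": "9 Elm Rd",
     "offense_code": "220", "occurred_at": "2026-01-06T09:00"},
    {"report_id": "R-105", "shift": "swing", "incident_address": "3 Pine Ct",
     "offense_code": None, "occurred_at": "2026-01-06T17:20"},
]

rates = failure_rate_by_shift(reports)
print(rates)
```

Reports that return a non-empty problem list would be sent back to the author instead of moving to Records, and the per-shift rates are the kind of figure an internal leadership dashboard could track over time.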

A Diagnostic for Leadership

If you aren’t sure where your department stands, ask yourself these five questions:

- The 3-Year Rule: Have you been waiting more than 3 years for your personnel to “catch up” to your current technology? If so, you need external help.

- The Transparency Test: Is your data clean enough to be presented to the public daily? If the answer is no, your quality checkpoints are failing.

- The Spend vs. Success Ratio: Are you spending a fortune on data management but still failing to produce actionable insights?

- The Software Trap: Do you think a new CAD or RMS will fix the problem? (Hint: It probably won’t if the input process remains broken.)

- The AI Readiness Gap: How far are you from adopting AI or advanced visualization? If you are still living in Excel or basic Google Earth, you are falling behind the curve.

The Path Forward

Transitioning from a “horror story” to a “love story” with your data is a matter of determination. It requires identifying gaps, holding the “assembly line” accountable, and refusing to accept dirty data as inevitable.

The ball is in your court. You can continue to manage by guesswork, or you can fix the line and finally see the real picture of your community.
