Artificial intelligence (AI) is rapidly becoming a common tool in law enforcement, from evidence-based policing algorithms to investigative chatbots. With this growth come valid concerns about bias, transparency, fairness, and due process in AI-driven policing. How can agencies harness AI’s benefits for public safety while upholding civil liberties and community trust? This post examines the ethical use of AI in policing and best practices for responsible deployment.

Recognizing the Risks: Bias, Transparency, and Due Process

- Algorithmic Bias: One major concern is that AI systems could reinforce or amplify biases present in historical policing data. If the training data reflects biased policing (e.g., over-policing of certain neighborhoods or demographics), the AI may perpetuate those patterns. Bias can also creep in through seemingly technical choices: which crimes the AI is tasked to predict, how data is sampled, and which features are used. For example, if a model relies heavily on arrest data, it might direct more attention to communities that were already over-surveilled. That attention produces more arrests there, which in turn creates a feedback loop of bias. The ethical mandate is to prevent discriminatory outcomes and ensure AI doesn’t unfairly target groups or locations. This requires careful bias testing and ongoing audits of AI tools.

- Transparency and Explainability: Police AI systems, whether for predictive policing or automated decision support, often use complex algorithms that are not easily understood by the public or even by officers. This opacity can erode trust. When an AI flags a person or area as “high risk,” it’s essential to explain why. Otherwise, AI adds a “layer of mystery” to decision-making that leaves non-experts unable to tell why it made a recommendation. This directly affects accountability: citizens and oversight bodies need to be able to scrutinize AI-driven decisions. If an officer acts on an AI tip, both the officer and the community should understand the basis of that tip. Agencies must demand explainable AI from vendors and maintain documentation on how models work and how they are tested.

- Fairness and Due Process: In policing, protecting civil rights is paramount. AI predictions should never be used as the sole justification for stops, arrests, or sentencing. Doing so would bypass the legal standards of reasonable suspicion and probable cause. As experts note, an AI labeling a location “high risk” does not change the constitutional threshold for policing in that area. Departments must treat AI output as advisory, not as a deterministic verdict. Due process requires that individuals are assessed on evidence and law, not on algorithmic inference alone. In practice, any AI-provided lead must be corroborated by human investigators. Courts and defense attorneys are also beginning to scrutinize AI evidence, so transparency about how an AI reached its output is crucial for that evidence to hold up in legal proceedings.

SoundThinking’s Approach to Ethical AI

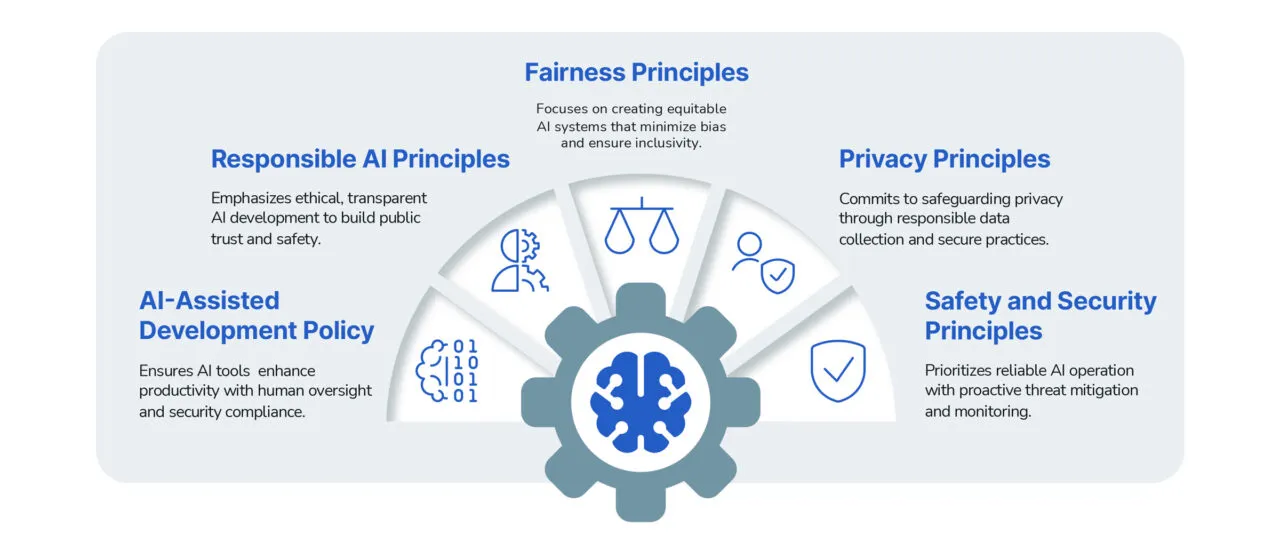

Leading public safety technology firms like SoundThinking have recognized these concerns and built ethics into their AI development process. SoundThinking states that its use of AI is governed by internal policies, bias evaluations, audits, human oversight, and transparency. In practice, this means every AI feature undergoes bias testing and review, with mechanisms in place to catch and correct biased outcomes. The company’s AI principles emphasize robust governance and documentation for every model, covering how it was trained and tested and what its known limitations are. By embedding governance and fairness checks “directly into the AI stack,” the company aims to ensure its systems help solve crimes justly and transparently.

SoundThinking’s framework involves human-in-the-loop oversight at critical decision points. As a rule, final decisions remain with trained officers or investigators, not with the AI alone. This aligns with a core principle: AI in policing is a force multiplier, not a replacement for human officers. Human officers provide context, judgment, and community understanding that an algorithm lacks. Requiring an officer to review AI outputs before action is taken adds a layer of accountability and a common-sense check.

- Architecting for Fairness: Mitigating bias is an explicit design goal. For instance, data scientists exclude features that correlate strongly with race or ethnicity (such as certain neighborhood socioeconomic factors). SoundThinking’s ResourceRouter™ product provides one example: its patrol guidance model focuses on citizen-reported crime types rather than enforcement-driven data, to avoid skewing toward over-policed offenses. Such design choices, informed by diverse stakeholder input and community feedback, help “architect against bias” rather than simply trying to fix it after the fact.

Ensuring Responsible Deployment and Oversight

Ethical AI use doesn’t end at model development; it extends to how agencies deploy and govern these tools in the field. Comprehensive audits are a best practice: law enforcement agencies and tech firms should regularly audit AI systems for bias or error, and openly publish the results. This transparency helps build public confidence. Partnering with outside experts or community representatives to review AI outcomes can also ensure accountability.
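As one illustration of what a recurring bias audit might compute, the sketch below compares how often an AI tool flags cases across groups. It then reports the ratio of the lowest flag rate to the highest. The record format, group labels, and function names are hypothetical. The 0.8 review threshold is an assumption that echoes the "four-fifths" heuristic from US employment-selection guidance. A low ratio is a signal for human review, not proof of bias, and a passing ratio is not proof of fairness.

```python
# Hypothetical sketch of a periodic disparity check on an AI tool's flags.
import json
from collections import defaultdict


def flag_rates(records):
    """records: iterable of (group, flagged) pairs; returns flag rate per group."""
    totals, flagged = defaultdict(int), defaultdict(int)
    for group, was_flagged in records:
        totals[group] += 1
        flagged[group] += int(bool(was_flagged))
    return {group: flagged[group] / totals[group] for group in totals}


def disparity_report(records, min_ratio=0.8):
    """Compare lowest and highest group flag rates; min_ratio is illustrative."""
    rates = flag_rates(records)
    if not rates:
        raise ValueError("No records to audit")
    low, high = min(rates.values()), max(rates.values())
    ratio = low / high if high > 0 else 1.0  # no flags at all -> no disparity
    return {
        "rates": rates,
        "ratio": round(ratio, 3),
        "needs_review": ratio < min_ratio,
    }


if __name__ == "__main__":
    # Made-up audit sample: group A flagged 2 of 10 times, group B 5 of 10.
    sample = [("A", i < 2) for i in range(10)] + [("B", i < 5) for i in range(10)]
    # JSON output is one way results could be shared in a published audit summary.
    print(json.dumps(disparity_report(sample), indent=2))
```

In a real audit, the groups, time windows, and outcome definitions would need to be set with outside experts and community representatives. The raw rates should also be read alongside context such as differences in reporting patterns.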

Equally important is establishing clear usage policies and protocols for human oversight. Agencies deploying AI for policing should formalize guidelines on what the AI will and will not be used for, how officers are to incorporate AI outputs, and how to handle disagreements between an AI suggestion and an officer’s intuition or evidence. SoundThinking’s policies, for example, mandate that every AI-driven action remain traceable and auditable by humans. Police departments can mirror this by creating audit trails for AI-informed decisions (e.g., logging why an AI alert was or wasn’t followed).
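An audit trail for AI-informed decisions could be as simple as one structured record per reviewed alert, written to an append-only log. The sketch below assumes a JSON Lines file and a hypothetical `AlertDecision` record. All of its field names, including `alert_id`, `reviewer`, `action_taken`, `rationale`, and `evidence`, are illustrative and do not come from any SoundThinking product. The record refuses to be created without a human-written rationale, so both followed and not-followed alerts carry an explanation.

```python
# Hypothetical sketch of an audit-trail record for AI-informed decisions.
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


def _utc_now():
    return datetime.now(timezone.utc).isoformat()


@dataclass
class AlertDecision:
    alert_id: str        # identifier of the AI alert being reviewed
    reviewer: str        # person accountable for the decision
    action_taken: bool   # True if the alert was acted on
    rationale: str       # human-written reason, required either way
    evidence: list[str] = field(default_factory=list)  # corroborating evidence refs
    timestamp: str = field(default_factory=_utc_now)   # UTC, ISO 8601

    def __post_init__(self):
        # Refuse to record a decision without a human explanation.
        if not self.rationale.strip():
            raise ValueError("A rationale is required for every AI-informed decision")

    def to_log_line(self):
        """Serialize the record as one JSON line."""
        return json.dumps(asdict(self), sort_keys=True)


def append_to_log(decision, path):
    """Append a decision to a JSON Lines file without touching earlier entries."""
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(decision.to_log_line() + "\n")
```

For example, `append_to_log(AlertDecision("alert-0042", "reviewer-17", False, "Alert not corroborated by witness statements"), "decisions.jsonl")` would record a declined alert. An append-only file by itself is not tamper-proof. A production system would also need access controls and retention rules.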

- Training and Culture: To use AI responsibly, officers and leadership need training not just on a tool’s interface but on its ethical and legal implications. Regular training can cover the limits of AI, the risks of over-reliance on the system, and how to recognize potential biases. Leadership should encourage a culture where personnel feel comfortable questioning or overriding AI recommendations that seem off-base. This human judgment is a safety net against AI errors. Moreover, involving officers in the AI implementation process (through feedback sessions, pilot programs, etc.) can improve both the system’s design and the trust users place in it.

Balancing Public Safety Benefits with Civil Liberties

Ethical use of AI in policing requires a proactive, policy-driven approach that anticipates issues before they arise. When implemented with care, AI offers significant upside for public safety. It can analyze vast datasets in seconds, uncover crime patterns or suspects that humans might miss, and free officers from tedious tasks. For example, automated report writing or smart search tools can save hours of manual work, allowing officers to spend more time in the community. Used well, AI can both reduce crime and strengthen community trust by enabling more effective and fairer policing.

However, those public safety gains will be undermined if the community doesn’t trust the technology. Responsible deployment therefore means engaging the community and being transparent about what tools are used, what data they rely on, and what safeguards are in place. It also means continuously evaluating outcomes: Do arrest rates, use-of-force incidents, or community complaints show any disparities since AI adoption? Agencies must be ready to pause or adjust AI systems that are not meeting fairness and accuracy expectations.

The goal is to use AI as a trusted assistant that enhances investigations and decision-making while unwaveringly upholding the principles of justice and equality that are the foundation of policing. By combining rigorous internal controls (bias audits, oversight, transparency) with strong external accountability (community engagement and legal compliance), law enforcement can harness AI’s benefits safely and fairly. With careful stewardship, agencies can leverage AI to improve both public safety and public trust. That balance is not only possible but imperative.

Contact Us to Learn More About SoundThinking’s SafetySmart Platform